Enterprise AI rarely fails in dramatic ways. It doesn’t usually crash with a warning banner or stop an operation entirely. Instead, it fails quietly. A recommendation becomes slightly off. A forecast drifts just enough to be ignored. A system keeps running long after it should have asked for help.

Most organisations don’t notice these moments immediately. They notice them months later, when costs rise, confidence drops, or teams start bypassing the system altogether.

This is the uncomfortable reality of AI at scale: failure is not an exception. It is a condition. And systems that are not designed with that assumption tend to break trust faster than they break code.

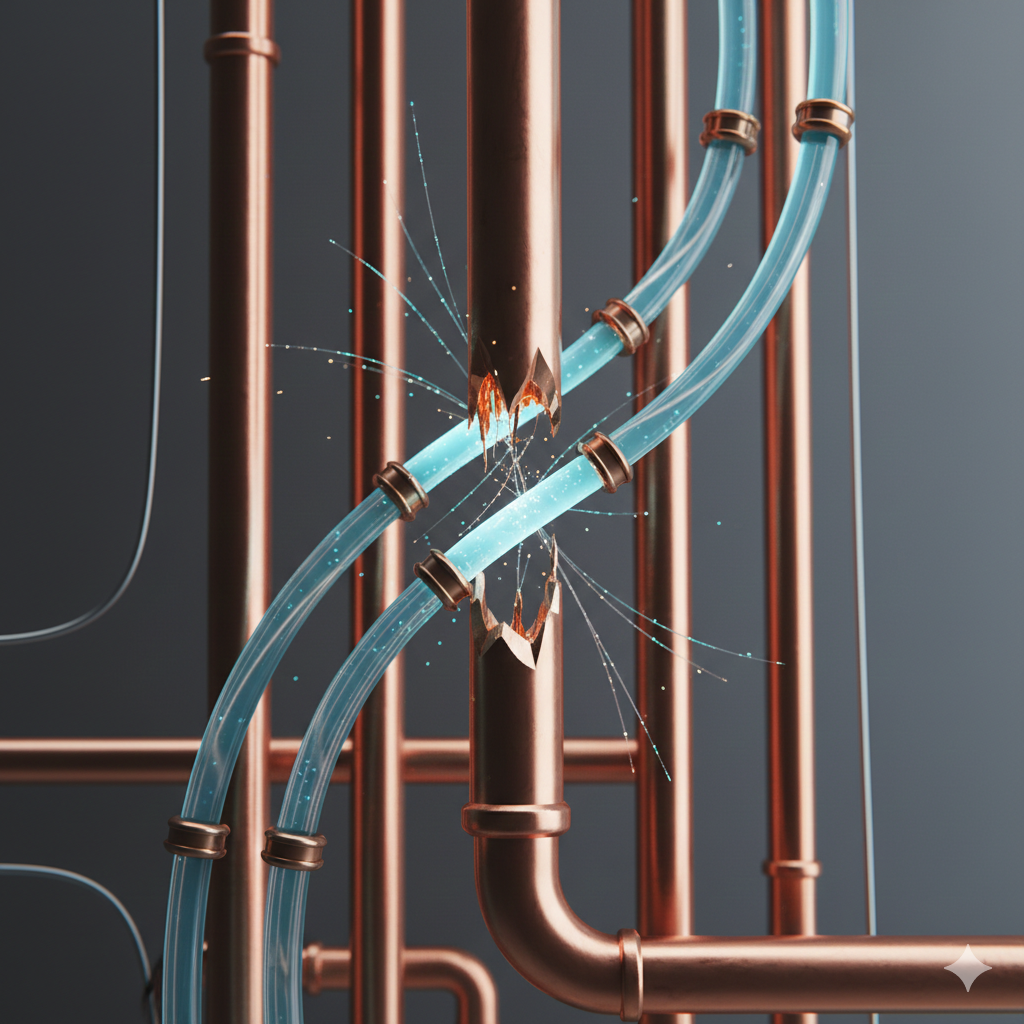

Enterprise AI Lives in Unstable Environments

Unlike consumer applications, enterprise AI operates inside environments that are constantly shifting. Data sources change ownership. Processes evolve. Legacy systems coexist with modern ones. People override workflows when deadlines tighten.

In these conditions, the idea of a “stable input” is mostly theoretical.

A forecasting model trained on last year’s demand patterns may still technically function today, but the context around it has changed. Supplier behaviour shifts. Energy costs fluctuate. Regulatory constraints tighten. The model hasn’t failed mathematically, but operationally, it no longer understands the world it’s advising on.

That gap is where most AI failures begin.
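One way to make that gap visible is to compare recent forecast error with the error the model showed when it was first accepted. The following is a minimal sketch, not a definitive method: the function names, the `tolerance` factor of 1.5, and the demand figures are all assumptions chosen for illustration.

```python
from statistics import mean


def mean_abs_error(actuals, forecasts):
    """Average absolute gap between observed values and forecasts."""
    return mean(abs(a - f) for a, f in zip(actuals, forecasts))


def has_drifted(baseline_error, recent_actuals, recent_forecasts, tolerance=1.5):
    """Flag drift when recent error exceeds the baseline error by `tolerance` times.

    The tolerance value is a hypothetical default; a real threshold would
    need to be tuned to the business cost of a missed or false alarm.
    """
    recent_error = mean_abs_error(recent_actuals, recent_forecasts)
    return recent_error > tolerance * baseline_error


# Hypothetical demand figures: the error measured at sign-off versus today.
baseline = mean_abs_error([100, 110, 105], [98, 112, 104])
drifted = has_drifted(baseline, [130, 90, 150], [105, 108, 110])
```

A check like this does not explain why the world changed. It only signals that the model's assumptions deserve a second look.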

Accuracy Does Not Mean Reliability

This environmental instability raises a crucial distinction: accuracy does not necessarily mean reliability.

Many enterprise AI initiatives still measure success through accuracy benchmarks. While those numbers matter, they don’t answer the question decision-makers actually care about: Can this system be trusted when conditions are unclear?

A model that performs well only when data follows an exact pattern is inherently fragile. In practice, robustness is often more valuable than peak performance.

This is why experienced teams value models that:

- Recognise uncertainty

- Slow down when signals conflict

- Expose confidence levels rather than hiding them

Systems that pretend to be certain in uncertain conditions tend to cause the most damage.
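As a rough illustration of exposing confidence rather than hiding it, the sketch below returns the confidence alongside every decision and withholds the answer when confidence is low. The `Decision` type, the `decide` function, and the 0.8 threshold are hypothetical names and values, not part of any particular library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    label: Optional[str]   # None when the system declines to answer
    confidence: float      # always exposed, never hidden
    needs_review: bool     # True when a person should look at this case


def decide(probabilities: dict, min_confidence: float = 0.8) -> Decision:
    """Pick the most likely label, but abstain when confidence is too low.

    `probabilities` maps each candidate label to the model's score for it.
    The 0.8 threshold is an assumed default for illustration only.
    """
    label, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    if confidence < min_confidence:
        return Decision(label=None, confidence=confidence, needs_review=True)
    return Decision(label=label, confidence=confidence, needs_review=False)


clear_case = decide({"approve": 0.93, "reject": 0.07})
unclear_case = decide({"approve": 0.55, "reject": 0.45})
```

In the unclear case the caller receives no label at all, which makes it hard to mistake a coin flip for a recommendation.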

Failure Should Trigger Adaptation, Not Silence

One of the most dangerous behaviours in enterprise AI systems is silent failure. When predictions degrade without triggering alerts, teams continue operating under false confidence.

Designing for failure means building systems that respond when assumptions are broken. That reaction does not always need to be dramatic. Often, it simply means narrowing the scope, escalating to human review, or reverting to a more conservative approach.

In manufacturing, this might look like pausing automated optimisation when sensor data becomes inconsistent. In eCommerce, it may involve freezing pricing recommendations during abnormal demand spikes.

The key is intentional restraint. Good AI knows when not to act.
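The manufacturing example could be sketched as follows, assuming several redundant sensors measure the same quantity. The function names, the `max_spread` limit of 5.0, and the readings are illustrative assumptions rather than a real control system.

```python
def sensors_agree(readings, max_spread=5.0):
    """Return True when redundant sensor readings fall within `max_spread`.

    With fewer than two readings there is nothing to cross-check, so the
    function treats the data as unreliable.
    """
    if len(readings) < 2:
        return False
    return max(readings) - min(readings) <= max_spread


def next_setpoint(current, proposed, readings):
    """Apply the optimiser's proposal only when the sensors are consistent.

    Otherwise hold the current setpoint, which is the conservative fallback.
    """
    if sensors_agree(readings):
        return proposed
    return current


applied = next_setpoint(70.0, 74.5, [20.1, 20.4, 19.9])  # sensors agree
held = next_setpoint(70.0, 74.5, [20.1, 35.7, 19.9])     # one sensor disagrees
```

The fallback here is deliberately boring: keep the last known setting. In a real system, the same branch would typically also raise an alert so the pause does not become another silent failure.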

Human Oversight Is a Design Feature, Not a Backup Plan

There is a misconception that human involvement indicates weak automation. In reality, the most durable AI systems are built with structured human intervention baked in from the start.

This doesn’t mean constant supervision. It means:

- clearly defined escalation paths

- explainable signals for review

- shared accountability between system and operator

When people understand how and when an AI system asks for help, trust grows. When they don’t, they disengage or override it entirely.

Enterprise AI succeeds when it complements judgment rather than replacing it.

Many Failures Are Organisational, Not Technical

Some AI systems fail even when the technology works exactly as intended. The breakdown happens elsewhere.

Teams may disagree about how outputs should be applied. Incentives may favour quick delivery over correctness. Ownership of errors in AI outputs may be unclear.

In such cases, the model becomes the scapegoat for problems that sit in the wider organisation.

Building failure-tolerant AI means coming to terms with these realities early. Architecture matters, but so do clear decision boundaries, a shared understanding of what success means, and proper governance.

Learning From Failure Is Where Real Value Emerges

AI systems that improve over time do so because failures are treated as learning inputs, not liabilities. Logs are reviewed. Edge cases are examined. Feedback loops are tightened.

This requires discipline. It also requires cultural maturity. Teams must feel safe acknowledging when systems underperform, without rushing to assign blame.

Over time, this approach creates AI that reflects real operational complexity, not just theoretical design.

Why This Matters More Than Ever

The more deeply AI is involved in business decision-making, the higher the cost of failure. A system that is well-intentioned but irresponsible is not a trustworthy one.

The organisations at the forefront of the next wave of AI will not be the ones pursuing complexity for its own sake. They will be the ones building systems that bend rather than break: systems that understand uncertainty and are designed to live with it.

Designing AI for failure is not about lowering ambition. It is about building systems that last.

Final Thoughts

Enterprise AI doesn’t have to be perfect. It must be honest about what it knows and what it doesn’t, and it must know when to step back.

The companies that understand this are not just building better systems. They are building durable intelligence.