We help companies create state-of-the-art multimodal AI solutions that accelerate automation, improve system intelligence, and integrate structured and unstructured data. We are a trustworthy multimodal AI development company that offers scalable solutions that adapt to complex business needs.

Modern organizations handle large volumes of unstructured data, such as speech, documents, photos, and more. Traditional models treat these inputs independently, producing disjointed insights. Multimodal AI development addresses this by combining various data types into a single intelligent system. The results include smarter automation, faster decision-making across the organization, and an improved user experience.

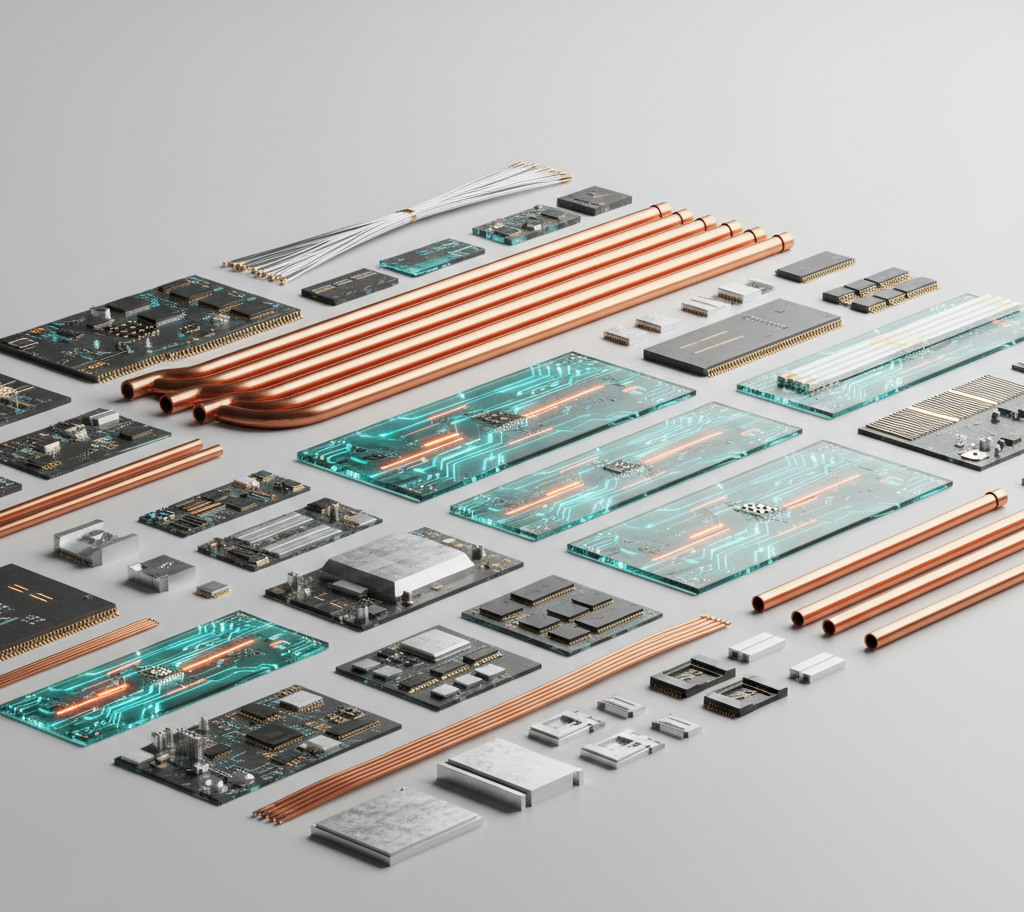

Multimodal systems are no longer just experimental; they now deliver practical results. The multimodal AI market is anticipated to reach a value of over $2.5 billion by 2030. We help companies stay ahead of the curve with scalable solutions built on architectures that combine language, vision, and sound. We design systems that do more than interpret inputs: they generate context-aware responses and actions.

Our multimodal AI solutions offer deeper insights by combining information from text, images, audio, and video to generate context-aware responses and actions.

We integrate structured and unstructured data from various modalities into coherent frameworks to facilitate seamless processing and more thorough analytics.

AI systems that connect multiple modalities enable cross-modal input and output generation, such as audio-to-video or image-to-text.

Tailored multimodal AI development solutions trained on private datasets for use in financial, healthcare, and retail applications.

Extend large language models with visual and audio capabilities to power multimodal AI agents and content production through LLM development.

Because our multimodal AI services process multiple data streams in real time, they are ideal for surveillance, customer interaction, and Internet of Things systems.

Our AI systems mimic human sensory comprehension by interpreting tone, emotion, images, and context to make more accurate and natural decisions.

Accessibility and user interaction are improved by multimodal interfaces that can understand voice, text, gestures, and images.

By analyzing multiple data types together, our multimodal AI generates more reliable, consistent, and bias-resistant results for enterprise-grade use cases.

You can achieve growth with the help of specialized generative AI consulting services that enhance operations and encourage creativity. Our generative AI consultants assist businesses in successfully implementing AI and achieving measurable results.

We provide strategic guidance to help businesses deploy, integrate, and maximize multimodal AI solutions that support their goals.

Integrate text, images, audio, video, and both structured and unstructured data into a single framework for more thorough analytics and insightful information.

We create artificial intelligence (AI) systems that can understand and react to questions about images and videos, offering accurate, contextually aware insights from visual content.

Make AR/VR experiences and interactive systems that respond naturally to speech, text, gestures, and images to engage users.

To enhance and speed up content workflows, use multimodal AI to automate image descriptions, video summaries, captions, and synthesized media.

For improved performance and practical insights, integrate multimodal AI into dashboards and business systems and offer scalable, industry-specific AI models.

Prioritize trust and ethical AI practices by making sure AI models are developed in a fair, transparent, and industry-compliant manner.

Integrate large language models with multimodal capabilities to interpret text, audio, images, and diagrams, enabling more sophisticated context-aware applications.

Manage every stage of the AI lifecycle for fully functional, integrated multimodal AI systems, from strategy and model development through deployment, monitoring, and optimization.

Instead of just following trends, we choose the right models based on your goals. Every solution we create uses proven adaptive AI models that learn from real-world data, adapt on the fly, and grow with your business.

Step 1

We begin by collecting data from a range of modalities, such as text, images, audio, and video, that is tailored to your use case. This ensures a sizable, diverse dataset that captures context and interaction from the real world.

Step 2

Each type of data is processed using specialized methods: videos are divided into frame sequences, text is tokenized and vectorized, audio signals are transformed into spectrograms, and images are resized and normalized. These steps prepare the inputs for feature extraction and ensure consistency across modalities.
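
As a rough illustration of the preprocessing described in this step, the sketch below shows one toy routine per modality in plain Python. All function names, the `vocab` mapping, and the window and clip sizes are hypothetical choices for this example; a production pipeline would normally rely on dedicated image, audio, and tokenizer libraries instead.

```python
import cmath
import math
import re


def tokenize_and_vectorize(text, vocab):
    """Lowercase the text, split it into word tokens, and map each token
    to an integer id from `vocab` (0 is used for unknown tokens)."""
    tokens = re.findall(r"\w+", text.lower())
    return [vocab.get(tok, 0) for tok in tokens]


def normalize_pixels(pixels):
    """Scale 8-bit pixel intensities into the range [0.0, 1.0]."""
    return [p / 255.0 for p in pixels]


def split_into_clips(frames, clip_len):
    """Divide a list of video frames into consecutive sequences of at most
    `clip_len` frames each."""
    return [frames[i:i + clip_len] for i in range(0, len(frames), clip_len)]


def magnitude_spectrogram(signal, window, hop):
    """Naive short-time DFT: one magnitude spectrum per window.
    Quadratic in `window`, so suitable only for illustration."""
    spec = []
    for start in range(0, len(signal) - window + 1, hop):
        chunk = signal[start:start + window]
        row = []
        for k in range(window // 2 + 1):
            acc = sum(
                x * cmath.exp(-2j * math.pi * k * n / window)
                for n, x in enumerate(chunk)
            )
            row.append(abs(acc))
        spec.append(row)
    return spec
```

Each routine returns plain lists of numbers, which keeps the outputs of all four modalities in a comparable form before feature extraction.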

Step 3

We employ task-specific models (e.g., CNNs for images, transformers for text, or audio encoders) to extract valuable features from each modality independently while preserving their unique structures and insights.
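
To give a feel for what a convolutional feature extractor does, here is a minimal sketch of the convolve, activate, and pool pattern on a one-dimensional signal. The kernels and function names are assumptions made for this example; real systems would typically use pretrained CNN or transformer encoders from a deep learning framework rather than hand-written filters.

```python
def conv1d(signal, kernel):
    """Valid-mode 1D cross-correlation, the core operation of a CNN layer."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Rectified linear activation: negative responses are set to zero."""
    return [max(0.0, v) for v in values]


def extract_features(signal, kernels):
    """Produce one feature per kernel: convolve, apply ReLU, then take the
    global maximum so the output size does not depend on the input length."""
    return [max(relu(conv1d(signal, kernel))) for kernel in kernels]


# Hypothetical hand-written filters: one fires on rising edges, one on falling edges.
RISING_EDGE = [-1.0, 1.0]
FALLING_EDGE = [1.0, -1.0]
```

For a signal that only rises, such as `[0.0, 0.0, 1.0, 1.0, 1.0, 1.0]`, the rising-edge feature is positive while the falling-edge feature stays at zero, which is the kind of modality-specific structure this step aims to preserve.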

Step 4

The gathered features are then integrated using advanced fusion networks, like attention-based models or multi-stream transformers, to produce a coherent representation that takes into account the interactions between modalities.
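
The idea behind attention-based fusion can be sketched in a few lines: each modality's feature vector is scored against a query, the scores are turned into weights with a softmax, and the fused representation is the weighted sum. This is a simplified illustration only. The function names are hypothetical, the query is fixed by hand, and all vectors are assumed to share one dimension, whereas a trained fusion network would learn its queries and projections.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(query, modality_features):
    """Fuse same-sized modality vectors with scaled dot-product attention.

    Returns the fused vector and the attention weight given to each modality.
    """
    names = list(modality_features)
    dim = len(query)
    scores = [
        sum(q * x for q, x in zip(query, modality_features[name])) / math.sqrt(dim)
        for name in names
    ]
    weights = softmax(scores)
    fused = [
        sum(w * modality_features[name][i] for w, name in zip(weights, names))
        for i in range(dim)
    ]
    return fused, dict(zip(names, weights))
```

Calling `attention_fuse([1.0, 0.0], {"text": [1.0, 0.0], "image": [0.0, 1.0]})` gives the text vector the larger weight, because it aligns more closely with the query.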

Step 5

Because the fusion model has been trained to analyze contextual data across modalities, it can detect intent, sentiment, or patterns more precisely. This leads to improved performance on tasks such as categorization, retrieval, and generation.

Step 6

Our output modules transform the fused data into insights or predictions that can be used for visual querying, speech recognition, content creation, or multimodal search.
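
For the multimodal search case, one common approach is to rank stored items by cosine similarity to a fused query embedding. The sketch below assumes that items and queries already live in the same embedding space; the `index` layout, the item names, and the `retrieve` helper are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, index, top_k=2):
    """Return the names of the `top_k` items most similar to the query."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


# Hypothetical index: item name -> embedding produced by the fusion stage.
index = {
    "product_photo": [1.0, 0.0],
    "support_call": [0.0, 1.0],
    "demo_video": [1.0, 1.0],
}
```

A linear scan like this is fine for a handful of items; larger catalogs usually call for an approximate nearest-neighbor index.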

Step 7

We use domain-specific datasets to improve the model’s accuracy and relevance. Our process ensures that the solution adapts to your business environment while maintaining the general capabilities of core models.
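
One way to adapt a model to a domain while keeping its general capabilities is to freeze the pretrained base and train only a small task head on top of it. The sketch below illustrates that idea under stated assumptions: `frozen_base` is a toy stand-in for a pretrained encoder, the head is a logistic regression trained with plain stochastic gradient descent, and the learning rate and epoch count are arbitrary. It is not a description of any specific production training setup.

```python
import math


def frozen_base(x):
    """Toy stand-in for a pretrained encoder. It is never updated during
    fine-tuning, so whatever it already represents is preserved."""
    return [x[0] + x[1], x[0] - x[1]]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(examples, epochs=200, lr=0.5):
    """Train only the head's weights and bias on (input, label) pairs."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in examples:
            feats = frozen_base(x)
            pred = sigmoid(sum(wi * fi for wi, fi in zip(w, feats)) + b)
            err = pred - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, feats)]
            b -= lr * err
    return w, b


def predict(x, w, b):
    """Classify an input using the frozen base plus the trained head."""
    feats = frozen_base(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, feats)) + b) >= 0.5
```

Because only `w` and `b` change, the same base can serve several domain-specific heads side by side.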

Step 8

Finally, we deliver the solution through applications, APIs, or internal tools with a secure, intuitive interface, so you can start using multimodal inference in real time across your processes.

Work with Ratovate, a trusted multimodal AI development company, to turn complex data into real-time intelligence. From design to implementation, we help you create multimodal systems that are safe, scalable, and highly effective, tailored to the needs of your sector.

Ready to turn your ideas into reality? Ratovate is here to help. Get in touch with us today, and let’s create something extraordinary.

Sales and general inquiries

Want to join Ratovate?